Abstract

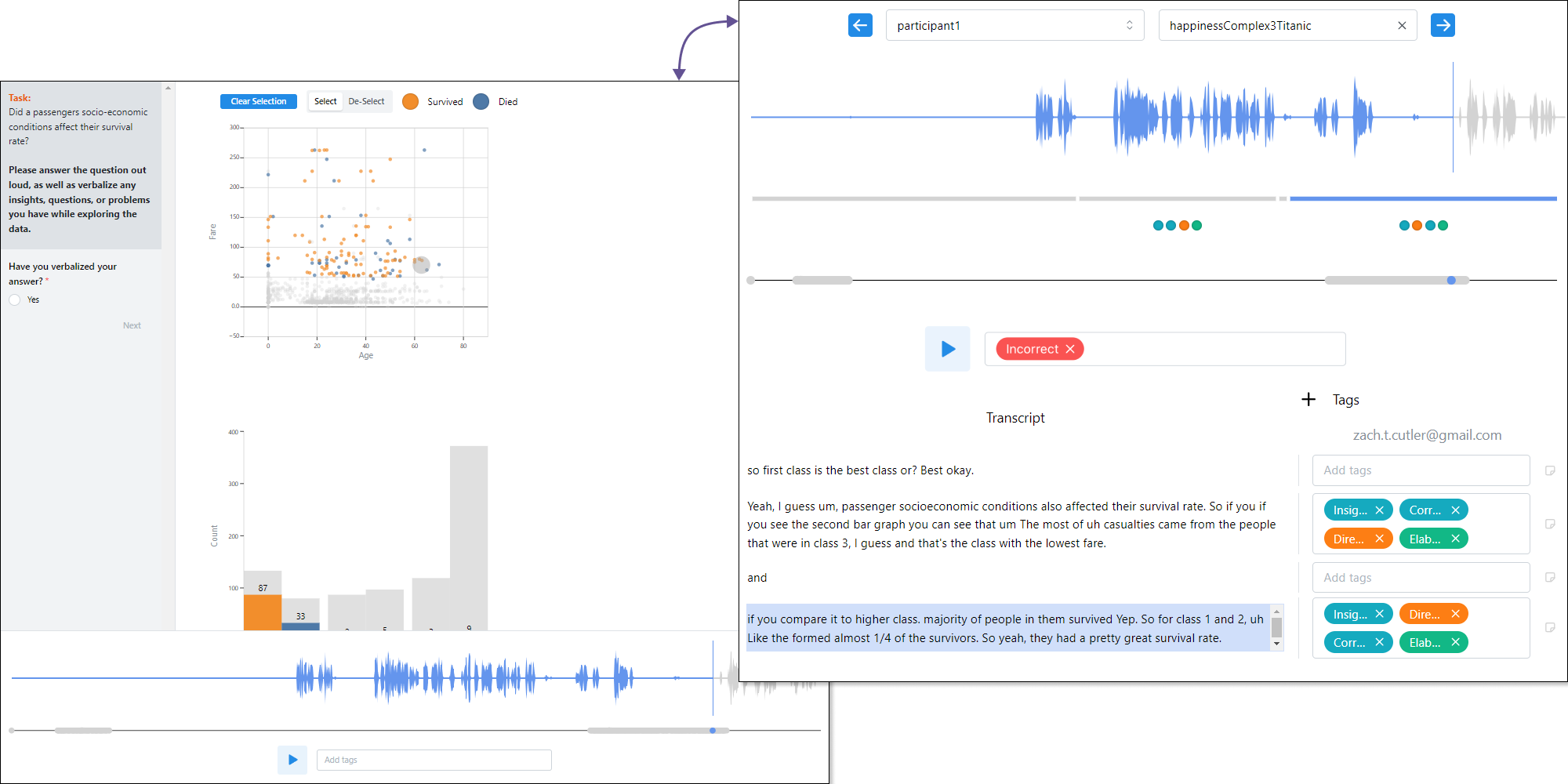

The think-aloud (TA) protocol is a useful method for evaluating user interfaces, including data visualizations. However, TA studies are time-consuming to conduct and hence often have a small number of participants. Crowdsourcing TA studies would help alleviate these problems, but the technical overhead and the unknown quality of results have restricted TA to synchronous studies. To address this gap, we introduce CrowdAloud, a system for creating and analyzing asynchronous, crowdsourced TA studies. CrowdAloud captures audio and provenance (log) data as participants interact with a stimulus. Participant audio is automatically transcribed and visualized together with event data and a full recreation of the state of the stimulus as seen by participants. To gauge the value of crowdsourced TA studies, we conducted two experiments: one to compare lab-based and crowdsourced TA studies, and one to compare crowdsourced TA studies with crowdsourced text prompts. Our results suggest that crowdsourcing is a viable approach for conducting TA studies at scale.

Citation

Zach Cutler, Lane Harrison, Carolina Nobre, Alexander Lex

Crowdsourced Think-Aloud Studies

SIGCHI Conference on Human Factors in Computing Systems (CHI), 1-23, doi:10.1145/3706598.3714305, 2025.

BibTeX

@inproceedings{2025_chi_crowdaloud,
  title = {Crowdsourced Think-Aloud Studies},
  author = {Zach Cutler and Lane Harrison and Carolina Nobre and Alexander Lex},
  booktitle = {SIGCHI Conference on Human Factors in Computing Systems (CHI)},
  publisher = {ACM},
  doi = {10.1145/3706598.3714305},
  pages = {1--23},
  year = {2025}
}

Acknowledgements

We thank Max Lisnic for help with Study 2 and the reviewers for their constructive feedback throughout the revision process, which substantially strengthened the manuscript. This work is supported by the National Science Foundation (NSF 2213756, 2213757, and 2313998).